
This is an overview of the Sphere Engine Containers utilities. Sphere Engine Containers provides a toolkit designed to enhance the project development process. Most of the advanced features of the package are encapsulated in versatile and powerful one-liners.

This overview briefly describes the toolkit components and gives simple examples of their use.

What is included in the toolkit?

The toolkit delivers utilities for managing scenario stages. Here is a summary of the currently available tools:

- Evaluator - for carrying out the whole evaluation process, from combining partial results up to the final result and the summary; it is composed as a chain of support tools (that can also be used independently):
  - Validator - for checking the validity of intermediate results,
  - Test Result Converter - for unifying intermediate results of the execution into a common format,
  - Grader - for establishing the final result,
- Scenario Result - for manual management of the execution's final result,
- Stage - for accessing the outcome of particular stages,
- Environment - for accessing various constants, parameters, paths, names, URLs, and more,
- Debug - for debugging procedures.

How is the toolkit delivered?

The base version of the toolkit is delivered as a Python package named se_utils. It is available to your Python scripts within Sphere Engine Containers projects.

Usage example for Python:

```python
from se_utils.evaluator import UnitTestsEvaluator
from se_utils.converter import XUnitConverter

# evaluate using unit tests but provide a custom XML report path
UnitTestsEvaluator(
    converter=XUnitConverter(ut_report_path='/home/user/workspace/my_report.xml'),
).run()
```

Alternatively, for many straightforward use cases, there is also a command line interface (CLI) wrapper for most se_utils package functionalities. The CLI wrapper is globally accessible within Sphere Engine Containers projects as a shell script named se_utils_cli.

Usage example for CLI:

```bash
#!/bin/bash

# get output from the run stage
RUN_STDOUT=$(se_utils_cli stage run stdout)

# check whether the output contains expected string
if [[ "$RUN_STDOUT" =~ "Hello Sphere Engine!" ]]; then
    # if yes, mark scenario execution as successful
    se_utils_cli scenario_result status "OK"
else
    # otherwise, mark scenario as unsuccessful
    se_utils_cli scenario_result status "FAIL"
fi
```

Toolkit utilities description

Here, we discuss each toolkit utility in detail. Each section is supplemented with Python and CLI tool examples. Although their specification has not been discussed yet, you can treat them as pseudocode for now (though they are not pseudocode: they really are that straightforward!).

You can also go directly to the technical reference documents:

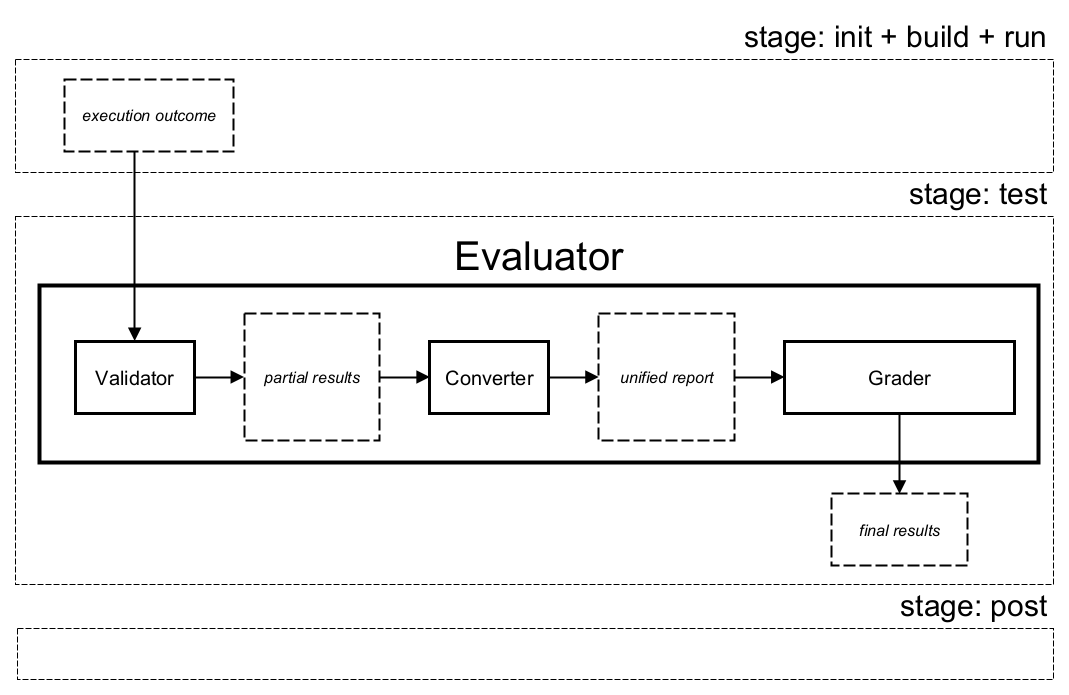

Evaluator

The Evaluator utility carries out the whole evaluation process performed in the test stage of the scenario. It combines all necessary steps:

- validation of the run stage outcome (Validator sub-utility),
- aggregation of the partial results (Converter sub-utility),
- final assessment (Grader sub-utility).
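To make the chain concrete, here is a minimal sketch of the validate, convert, and grade flow in plain Python. It does not use se_utils: the functions `evaluate` and `percent_grader`, the dictionary keys, and the rule that a failed validation short-circuits to `FAIL` are all assumptions made for this illustration, not the behavior of the real Evaluator.

```python
from typing import Callable

# Hypothetical stand-ins for the three sub-utilities; this is not the se_utils API.
Report = list[dict]


def evaluate(
    validate: Callable[[], bool],
    convert: Callable[[], Report],
    grade: Callable[[Report], dict],
) -> dict:
    """Chain validation, conversion and grading into a single final result."""
    if not validate():
        # assumption of this sketch: an invalid run outcome short-circuits to FAIL
        return {"status": "FAIL", "score": 0.0}
    report = convert()  # unified list of test results
    return grade(report)  # final status and score


def percent_grader(report: Report) -> dict:
    """Score is the percentage of passed tests; OK if at least one passed."""
    passed = sum(1 for case in report if case["passed"])
    score = 100.0 * passed / len(report) if report else 0.0
    return {"status": "OK" if passed else "FAIL", "score": score}


result = evaluate(
    validate=lambda: True,
    convert=lambda: [{"name": "t1", "passed": True}, {"name": "t2", "passed": False}],
    grade=percent_grader,
)
print(result)  # {'status': 'OK', 'score': 50.0}
```

Passing the three steps as separate callables mirrors the idea that each sub-utility can be swapped or used on its own.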

The diagram below presents the role and place of the Evaluator in the scenario execution process.

The final results of the evaluation process include, but are not limited to:

- final status (e.g., OK, FAIL, Build error (BE), and more),
- final score,
- final execution time,
- (optional) tests report,
- stage results for each executed stage (i.e., init, build, run, test, post):
  - execution time,
  - exit code,
  - signal,
  - output data,
  - error stream data.

Python example:

```python
from se_utils.evaluator import UnitTestsEvaluator

UnitTestsEvaluator().run()
```

The example above presents a built-in Evaluator preconfigured to handle one of the typical scenarios, namely:

- the project has unit tests,
- unit tests are executed during the run stage,
- an XML report compatible with the xUnit schema is produced,
- and it is stored in the default place (i.e., se_utils.environment.path.unit_tests_report),
- the final result depends on the correctness of unit tests:
  - the final score is the percentage of passed unit tests,
  - the final status is positive (OK) if at least one unit test passes; otherwise it is negative (FAIL).
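The two grading rules listed above can be illustrated with a short, self-contained sketch that reads an xUnit-style XML report using only the Python standard library. It is not the se_utils implementation: the function name `summarize_xunit` is hypothetical, and it assumes that a test case counts as passed when it has no `failure`, `error`, or `skipped` child element.

```python
import xml.etree.ElementTree as ET


def summarize_xunit(xml_text: str) -> dict:
    """Compute a percentage score and an OK/FAIL status from an xUnit-style report.

    Hypothetical helper, not part of se_utils. A test case is treated as
    passed when it has no <failure>, <error> or <skipped> child element.
    """
    root = ET.fromstring(xml_text)
    # iter() finds <testcase> elements at any depth, so both a bare
    # <testsuite> root and a <testsuites> wrapper are handled
    cases = list(root.iter("testcase"))
    passed = sum(
        1
        for case in cases
        if all(case.find(tag) is None for tag in ("failure", "error", "skipped"))
    )
    score = 100.0 * passed / len(cases) if cases else 0.0
    status = "OK" if passed >= 1 else "FAIL"
    return {"passed": passed, "total": len(cases), "score": score, "status": status}


report = """<testsuite name="demo">
  <testcase name="test_add"/>
  <testcase name="test_sub"><failure message="expected 1"/></testcase>
  <testcase name="test_mul"/>
  <testcase name="test_div"><error message="ZeroDivisionError"/></testcase>
</testsuite>"""

print(summarize_xunit(report))
# {'passed': 2, 'total': 4, 'score': 50.0, 'status': 'OK'}
```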

The same result can be achieved by manual configuration of the Evaluator.

Python example:

```python
from se_utils.evaluator import Evaluator
from se_utils.validator import XUnitValidator
from se_utils.converter import XUnitConverter
from se_utils.grader import PercentOfSolvedTestsGrader
from se_utils import environment

Evaluator(
    validator=XUnitValidator(ut_report_path=environment.path.unit_tests_report),
    converter=XUnitConverter(ut_report_path=environment.path.unit_tests_report),
    grader=PercentOfSolvedTestsGrader,
).run()
```

The same can be achieved with the CLI tool. First, let's see the equivalent of the preconfigured variant.

CLI tool example:

```bash
#!/bin/bash

se_utils_cli evaluate UnitTestsEvaluator
```

The following example presents the CLI tool variant with manual configuration.

CLI tool example:

```bash
#!/bin/bash

se_utils_cli evaluate Evaluator \
    --validator "XUnitValidator" \
    --converter "XUnitConverter" \
    --grader "PercentOfSolvedTestsGrader"
```

Validator

The Validator utility is responsible for checking the validity of intermediate results, usually obtained during the run stage of the scenario's execution. It is typically incorporated as a sub-utility of the Evaluator, but it can also be used independently, if necessary.
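As an illustration of what a whitespace-tolerant check can look like, the sketch below compares two outputs token by token, so that differences in spacing, line breaks, or trailing newlines are ignored. This is only one plausible interpretation; the exact rules of `IgnoreExtraWhitespacesValidator` are defined in its technical reference, and the helper name `outputs_match` is hypothetical.

```python
def outputs_match(user_output: str, model_output: str) -> bool:
    """Return True if both outputs contain the same whitespace-separated tokens.

    Hypothetical helper, not the se_utils validator. str.split() with no
    arguments splits on any run of whitespace (spaces, tabs, newlines) and
    drops leading/trailing whitespace, so "1  2\n" and "1 2" compare as
    equal, while "12" and "1 2" do not.
    """
    return user_output.split() == model_output.split()


print(outputs_match("Hello   Sphere\nEngine!\n", "Hello Sphere Engine!"))  # True
print(outputs_match("Hello SphereEngine!", "Hello Sphere Engine!"))  # False
```

Note that this interpretation also ignores line structure; a stricter validator might compare line by line instead.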

Python example:

```python
from se_utils.validator import IgnoreExtraWhitespacesValidator
from se_utils.result import ScenarioResult

path_user_output = '/home/user/workspace/out.txt'
path_model_output = '/home/user/workspace/.sphere-engine/out.txt'

# compare the user's output with the model output
validator = IgnoreExtraWhitespacesValidator(path_user_output, path_model_output)
validation_output = validator.validate()

# use the validation status as the final status of the scenario
ScenarioResult.set(ScenarioResult.Status(validation_output['status']))
```

Note that while you can run the validator using the CLI tool, its usage is limited to the default paths, whereas the Python example above uses custom ones. To reproduce the same behavior with the CLI, you need to copy the necessary files into the default locations.

CLI tool example:

```bash
#!/bin/bash

DEFAULT_USER_OUTPUT_PATH="$SE_PATH_STAGE_RUN_STDOUT"
DEFAULT_TEST_OUTPUT_PATH="$SE_PATH_TEST_CASES/0.out"

# use $HOME instead of ~, which is not expanded inside quotes
USER_OUTPUT_PATH="$HOME/workspace/out.txt"
TEST_OUTPUT_PATH="$HOME/workspace/.sphere-engine/out.txt"

# copy output files to the default locations
cp "$USER_OUTPUT_PATH" "$DEFAULT_USER_OUTPUT_PATH"
cp "$TEST_OUTPUT_PATH" "$DEFAULT_TEST_OUTPUT_PATH"

validation_output="$(se_utils_cli validate IgnoreExtraWhitespacesValidator)"

# here you have to interpret the validation output
```

Test Result Converter

The Test Result Converter utility is responsible for unifying intermediate results obtained during the run stage of the scenario's execution (and potentially also the outcome of the validation process). Similarly to the Validator, it is usually used as a sub-utility of the Evaluator, but it can also be used independently, if necessary.

The main task of the Test Result Converter is to produce a final tests report that can later be consumed by the Grader. The final tests report is also available as one of the streams produced during the scenario's execution.

The specification of the final tests report is covered in detail in a separate technical reference document.
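The idea of unifying heterogeneous intermediate results can be sketched as follows: two hypothetical converters, one for an xUnit-style XML report and one for a plain output comparison, both produce the same record type, which is then serialized to JSON. The `TestRecord` fields and the JSON layout are assumptions made for this example; they are not the format of the final tests report described in the technical reference.

```python
import json
import xml.etree.ElementTree as ET
from dataclasses import asdict, dataclass


@dataclass
class TestRecord:
    # hypothetical common format shared by all converters in this sketch
    name: str
    passed: bool
    time: float  # seconds


def from_xunit(xml_text: str) -> list[TestRecord]:
    """Convert <testcase> elements of an xUnit-style report into TestRecords."""
    records = []
    for case in ET.fromstring(xml_text).iter("testcase"):
        passed = all(case.find(tag) is None for tag in ("failure", "error", "skipped"))
        records.append(
            TestRecord(
                name=case.get("name", ""),
                passed=passed,
                time=float(case.get("time", "0")),
            )
        )
    return records


def from_output_check(name: str, user_output: str, model_output: str) -> TestRecord:
    """Turn one whitespace-insensitive output comparison into a TestRecord (time not measured)."""
    return TestRecord(name=name, passed=user_output.split() == model_output.split(), time=0.0)


xml_report = (
    '<testsuite>'
    '<testcase name="test_a" time="0.25"/>'
    '<testcase name="test_b" time="0.5"><failure/></testcase>'
    '</testsuite>'
)
records = from_xunit(xml_report) + [from_output_check("stdout", "42\n", "42")]

# one JSON document regardless of where each result came from
print(json.dumps([asdict(r) for r in records]))
```

Keeping one record type means a grader only needs to understand a single format, whatever produced the partial results.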

Python example:

```python
from se_utils.converter import XUnitConverter

user_junit_report = '/home/user/workspace/maven_project/target/report.xml'

# convert the JUnit (xUnit-compatible) report into the final tests report
converter = XUnitConverter(ut_report_path=user_junit_report)
converter.convert()
```

Note that while you can run the converter using the CLI tool, its usage is limited to the default path of the report file. In the Python example above, this path can optionally be specified.

CLI tool example:

```bash
#!/bin/bash

USER_JUNIT_REPORT_PATH="$HOME/workspace/maven_project/target/report.xml"

# copy unit tests report to the default location
cp "$USER_JUNIT_REPORT_PATH" "$SE_PATH_UNIT_TESTS_REPORT"

se_utils_cli convert XUnitConverter
```

Grader

The Grader utility is responsible for establishing the final result based on the final tests report produced by the Test Result Converter. The final result is usually a compilation of the final status (e.g., OK, which stands for success), the score, and the execution time.

Similarly to the Validator and the Test Result Converter, the Grader is usually used as a sub-utility of the Evaluator. As with the others, it can also be used independently, if necessary.
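Given a unified list of test records (represented here as plain dictionaries), a grader only has to apply its rule. The sketch below mirrors the rule suggested by the name `AtLeastOneTestPassedGrader` and also aggregates a score and a total time. The function name, the record keys, and the choice to sum per-test times are assumptions for illustration, not the se_utils implementation.

```python
def grade_at_least_one_passed(records: list[dict]) -> dict:
    """Hypothetical grader: OK if any test passed, plus a percentage score and total time."""
    passed = sum(1 for r in records if r["passed"])
    return {
        "status": "OK" if passed > 0 else "FAIL",
        "score": round(100.0 * passed / len(records), 2) if records else 0.0,
        "time": sum(r["time"] for r in records),
    }


records = [
    {"name": "test_a", "passed": False, "time": 0.25},
    {"name": "test_b", "passed": True, "time": 0.5},
    {"name": "test_c", "passed": False, "time": 0.25},
]
print(grade_at_least_one_passed(records))
# {'status': 'OK', 'score': 33.33, 'time': 1.0}
```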

Python example:

```python
import shutil

from se_utils.grader import AtLeastOneTestPassedGrader
from se_utils import environment

path_custom_final_tests_report = '/home/user/workspace/custom_report.json'

# place the custom report where the Grader expects the final tests report
shutil.copyfile(path_custom_final_tests_report, environment.path.final_tests_report)

grader = AtLeastOneTestPassedGrader()
grader.grade()
```

CLI tool example:

```bash
#!/bin/bash

# use $HOME instead of ~, which is not expanded inside quotes
cp "$HOME/workspace/custom_report.json" "$SE_PATH_FINAL_TESTS_REPORT"

se_utils_cli grade AtLeastOneTestPassedGrader
```

Scenario Result

The Scenario Result utility allows you to manage the final result of the scenario's execution.

You can use it to:

- set final parameters: status, time, and score,
- get current final parameters: status, time, and score.

Python example:

```python
from se_utils.result import ScenarioResult

# store the final status in a variable
result = ScenarioResult.get()
final_status = result.status

# set the final status to FAIL
ScenarioResult.set(ScenarioResult.Status.FAIL)
```

CLI tool example:

```bash
#!/bin/bash

# store the final status in a variable
FINAL_STATUS=$(se_utils_cli scenario_result status)

# set the final status to FAIL
se_utils_cli scenario_result status FAIL
```

Stage

The Stage utility allows you to access the outcome of particular stages, namely init, build, run, and test.

For each supported stage, you can use it to get the following:

- stdout - output data produced during the stage (or the path to the file containing stdout data),
- stderr - error data produced during the stage (or the path to the file containing stderr data),
- exit_code - the exit code returned by the stage command,
- signal - the code of a signal that stopped the stage command.
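To clarify what these four fields mean, the sketch below runs an arbitrary command with Python's `subprocess` module and records the same kind of outcome. It does not reproduce how Sphere Engine Containers captures stage results; the `StageOutcome` type and the `run_command` helper are hypothetical. It relies on documented `subprocess` behavior on POSIX systems, where a negative return code `-N` means the process was terminated by signal `N`.

```python
import subprocess
import sys
from dataclasses import dataclass
from typing import Optional


@dataclass
class StageOutcome:
    stdout: str
    stderr: str
    exit_code: Optional[int]  # None if the command was killed by a signal
    signal: Optional[int]  # None if the command exited normally


def run_command(cmd: list[str]) -> StageOutcome:
    """Hypothetical helper: run `cmd` and capture its outcome."""
    proc = subprocess.run(cmd, capture_output=True, text=True)
    if proc.returncode < 0:
        # POSIX only: returncode -N means the process was terminated by signal N
        return StageOutcome(proc.stdout, proc.stderr, None, -proc.returncode)
    return StageOutcome(proc.stdout, proc.stderr, proc.returncode, None)


outcome = run_command([sys.executable, "-c", "print('Hello Sphere Engine Containers!')"])
print(outcome.exit_code, outcome.signal, "Hello Sphere Engine Containers!" in outcome.stdout)
# 0 None True
```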

Python example:

```python
from se_utils.stage import run_stage
from se_utils.result import ScenarioResult

# mark the scenario as successful if the run stage printed the expected string
if 'Hello Sphere Engine Containers!' in run_stage.stdout():
    ScenarioResult.set_ok()
else:
    ScenarioResult.set_fail()
```

CLI tool example:

```bash
#!/bin/bash

# grep -q exits with 0 if the string is found, without printing anything
if grep -q "Hello Sphere Engine Containers!" "$SE_PATH_STAGE_RUN_STDOUT"; then
    se_utils_cli scenario_result status "OK"
else
    se_utils_cli scenario_result status "FAIL"
fi
```

Note that the Stage utility does not provide access to the post stage results. This is because the post stage is the very last stage of a scenario execution, so its outcome is not available yet.

Environment

The Environment utility provides easy access to various constants, parameters, paths, names, URLs, and more.

Python example:

```python
from se_utils import environment

project_website_url = environment.network.remote_url(8080)
final_tests_report = environment.path.final_tests_report
```

CLI tool example:

```bash
#!/bin/bash

PROJECT_WEBSITE_URL=$(se_utils_cli environment remote_url 8080)
FINAL_PATH_REPORT="$SE_PATH_FINAL_TESTS_REPORT"
```

Note that for some parameters, using the CLI tool is not necessary and would be superfluous. Instead, these parameters are available directly through environment variables.
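Because these values are plain environment variables, a Python script can also read them directly with `os.environ`, for example when you want a clear error message if a script is run outside a scenario. The sketch below uses a variable name that appears in the CLI examples on this page (`SE_PATH_FINAL_TESTS_REPORT`); the helper `se_path` itself is hypothetical, and in most cases `se_utils.environment` is the more convenient option.

```python
import os
from pathlib import Path


def se_path(name: str) -> Path:
    """Hypothetical helper: return the path stored in the environment variable `name`."""
    value = os.environ.get(name)
    if value is None:
        raise KeyError(f"{name} is not set; is this running inside a Sphere Engine Containers scenario?")
    return Path(value)


if __name__ == "__main__":
    try:
        print(se_path("SE_PATH_FINAL_TESTS_REPORT"))
    except KeyError as err:
        print(err)
```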

Custom Webhooks

The Custom Webhooks utility provides an easy way to send a custom webhook during the scenario execution.

Python example:

```python
from se_utils.webhook import CustomWebhook

CustomWebhook().send()
```

CLI tool example:

```bash
#!/bin/bash

se_utils_cli send_custom_webhook
```

Debug

The Debug utility provides facilities and shorthands for debugging procedures. It is intended to be used by the Content Manager for internal purposes that should be kept separate from the end-user experience.

Python example:

```python
from se_utils.debug import debug_log

debug_log('this is an internal note for Content Manager access only')
```

CLI tool example:

```bash
#!/bin/bash

se_utils_cli debug_log "this is an internal note for Content Manager access only"

# log the contents of a file ($HOME instead of ~, which is not expanded inside quotes)
se_utils_cli debug_log < "$HOME/workspace/my_file.txt"
```